PRC Adapts Meta’s Llama for Military and Security AI Applications

Publication: China Brief Volume: 24 Issue: 21

By:

Executive Summary:

- Researchers in the People’s Republic of China (PRC) have optimized Meta’s Llama model for specialized military and security purposes.

- ChatBIT, an adapted Llama model, appears to have performed well in demonstrations of military uses such as intelligence, situational analysis, and mission support, outperforming other comparable models.

- Open-source models like Llama are valuable for innovation, but their deployment to enhance the capabilities of foreign militaries raises concerns about dual-use applications. The customization of Llama by defense researchers in the PRC highlights gaps in enforcement for open-source usage restrictions, underscoring the need for stronger oversight to prevent strategic misuse.

In September, the former deputy director of the Academy of Military Sciences (AMS), Lieutenant General He Lei (何雷), called for the United Nations to establish restrictions on the application of artificial intelligence (AI) in warfare (Sina Finance, September 13). This might suggest that Beijing has an interest in mitigating the risks associated with military AI. In practice, the opposite is true. The People’s Republic of China (PRC) is currently leveraging AI to enhance its own military capabilities and strategic advantages and is using Western technology to do so.

The military and security sectors within the PRC are increasingly focused on integrating advanced AI technologies into operational capabilities. Meta’s open-source model Llama (Large Language Model Meta AI) has emerged as a preferred model on which to build out features tailored for military and security applications. In this way, US and US-derived technology is being deployed as a tool to enhance the PRC’s military modernization and domestic innovation efforts, with direct consequences for the United States and its allies and partners.

PLA Experts’ Vision for Military AI

The PRC’s 2019 National Defense White Paper, titled “China’s National Defense for the New Era (新时代的中国国防),” notes that modern warfare is shifting toward increasingly informationized (信息化) and intelligentized (智能化) domains, demanding advances in mechanization, informationization, and AI development (Xinhua, July 24, 2019).

AI development in the military has accelerated in direct response to the demands of intelligent warfare, which itself has been propelled by recent technological advances. Experts from AMS and the People’s Liberation Army (PLA) have highlighted several key capabilities that AI systems must achieve to meet the PLA’s evolving military needs. First, large AI models must enable rapid response and decision-making to enhance battlefield situational awareness and support command functions. This includes autonomous mission planning and assisting commanders in making informed decisions under complex conditions. Strengthening the fusion of information from multiple sources is also seen as crucial, using AI to integrate data from satellite feeds, cyber intelligence, and communication intercepts. This is then used to deepen intelligence analysis and support joint operations, as highlighted by the PLA Joint Operation Outline (中国人民解放军联合作战纲要), which entered its trial implementation phase in 2020 (MOD, November 26, 2020). [1]

Military AI is also being applied extensively to cognitive and psychological warfare (China Brief, September 6, 2019; September 8, 2023; June 21). Generative AI models can be deployed to produce media content to influence narratives, conduct strategic influence campaigns, and undermine an adversary’s morale, according to AMS experts. [2] Large Language Models (LLMs) like ChatGPT can also rapidly integrate diverse information sources to enhance military intelligence analysis. With strong language processing capabilities, they can simplify data extraction, support real-time translation, and transform complex data into actionable insights, aiding military personnel in decision-making on the modern battlefield.

Experts within the military apparatus, including top defense industry players like the China Electronics Technology Group (CETC; 中国电子科技集团), are currently working on AI to enhance cybersecurity and network threat detection. One paper authored by employees at CETC argues that AI models can play a pivotal role in identifying and countering cyber threats and establishing robust early-warning systems to fortify military communication networks. [3] Another area of focus is predictive maintenance and supply chain management. Here, AI can be used to anticipate equipment failures and streamline supply logistics. This optimization is critical for the PLA’s sustained operational readiness, especially during prolonged engagements.

PLA experts are prioritizing the development of smaller, more “lightweight” AI models for deployment in resource-constrained environments like frontline operations. These models must be robust and capable of performing effectively with limited computational power, making them suitable for edge devices—small computers or sensors that can process data and operate without relying on distant servers. The Aiwu Large Model (艾武大模型) is a good example of this. According to one research paper, Aiwu offers cross-platform compatibility on both manned and unmanned systems, and can execute diverse mission tasks under challenging conditions. [4]

PLA experts consider military AI to be a foundational asset that must be highly adaptable, capable of integrating multimodal data and supporting autonomous decision-making in a wide range of tactical and strategic contexts. This approach stems from the understanding that future warfare will demand intelligent systems capable of processing real-time data, making proactive decisions, and synthesizing information from diverse sources. [5]

PRC Researchers Adapt Llama to Meet Military Demands

The potential that PRC researchers see in Meta’s Llama lies in the flexibility and efficiency of its foundation model, which makes it well suited to adaptation for various military and security systems. [6] A recent iteration, Llama 3.1, was launched in July and was described as having “capabilities that rival the best closed source models” (Meta, July 23). (Llama 3.2 was released on September 25, and so has not yet been treated in relevant research.) Because Llama is an open-source model, developers and researchers can modify it and build on top of it. PRC security researchers have focused on adapting it for multilingual dialogue, high-quality code generation, and complex mathematical problem-solving. [7] They argue that the transformer, the deep learning architecture that powers text-generating models like OpenAI’s ChatGPT, Meta’s Llama, and Google’s Gemini, enhances performance in tasks such as information summarization, threat analysis, and decision-making support that are crucial for defense and public safety (ACM, December 4, 2017). [8]

PRC researchers have also identified several limitations in Llama that they believe should be addressed before it can be optimized for military use. A primary concern is that Llama relies on open-source training data, which is largely general-purpose and lacks specificity for military contexts. [9] This leads to biases and limited domain-specific knowledge, particularly in areas of military strategy and security operations. In addition, the scarcity of comprehensive Chinese-language data restricts Llama’s ability to fully grasp linguistic nuances in Chinese-language communication, including the cultural context of specific utterances. [10] To overcome these challenges, PLA experts have combined improved data collection, computational methods, and algorithmic refinements. These efforts have enabled Llama to handle Chinese-language military terminology and tactics.

Researchers Explore Techniques to Adapt Llama

PRC researchers have employed a number of strategies to fine-tune Llama for military and security applications. One approach involves constructing domain-specific datasets, which includes gathering military-specific dialogue records, building data corpora from classified documents, integrating real-time operational data, and incorporating direct feedback from military personnel. [11] Other techniques include Low-Rank Adaptation (LoRA), reinforcement learning, multimodal integration, and infrastructure upgrades.

LoRA has become a crucial strategy for customizing Llama (MIT, May 1). It enables researchers to build specialized models on top of Llama by adding small, trainable low-rank matrices to existing model layers while leaving the original weights frozen. This adjusts the model’s responses in a targeted way and removes the need to retrain the entire model, which could be time-consuming and expensive. The approach allows the model to retain its core abilities while adapting to military-specific terminology, coded signals, and context-sensitive decision-making with minimal resource use. This balance makes LoRA suitable for adapting Llama to meet the demands of military applications. [12]
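The low-rank idea described above can be illustrated with a minimal sketch in plain Python. It is not taken from any of the cited papers: the class name `LoRALinear`, the `rank` and `alpha` parameters, and the toy matrix sizes are assumptions chosen for illustration. The sketch only shows how a frozen weight matrix can be combined with a small trainable update.

```python
import random


def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


class LoRALinear:
    """A frozen weight matrix W plus a low-rank update (alpha / rank) * B @ A.

    Hypothetical illustration: only A and B would be trained, W stays fixed.
    """

    def __init__(self, weight, rank, alpha=1.0, seed=0):
        rng = random.Random(seed)
        d_out, d_in = len(weight), len(weight[0])
        self.weight = weight  # frozen base weights, shape d_out x d_in
        # A starts with small random values, B starts at zero, so the
        # adapted layer initially behaves exactly like the base layer.
        self.A = [[rng.gauss(0, 0.01) for _ in range(d_in)] for _ in range(rank)]
        self.B = [[0.0] * rank for _ in range(d_out)]
        self.scaling = alpha / rank

    def effective_weight(self):
        """Return W + scaling * (B @ A)."""
        delta = matmul(self.B, self.A)
        return [
            [w + self.scaling * d for w, d in zip(w_row, d_row)]
            for w_row, d_row in zip(self.weight, delta)
        ]

    def forward(self, x):
        """Apply the adapted layer to a batch of row vectors x."""
        w_eff = self.effective_weight()
        w_t = [list(col) for col in zip(*w_eff)]  # transpose to d_in x d_out
        return matmul(x, w_t)

    def trainable_params(self):
        """Count the parameters in A and B (the only trainable ones)."""
        return len(self.A) * len(self.A[0]) + len(self.B) * len(self.B[0])
```

In this toy setup a layer with a 3 x 2 weight matrix and `rank=1` has 5 trainable values instead of 6. The saving becomes significant only at realistic sizes, where the rank is far smaller than the layer dimensions.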

Reinforcement learning involves fine-tuning models by repeating training runs with tailored feedback to optimize responses. To further optimize Llama, researchers use Direct Preference Optimization (DPO), which presents the model with paired examples of higher- and lower-quality responses so that it learns to prefer the former. Applied over a period of time, reinforcement learning allows Llama to adjust its responses dynamically and adapt to evolving situations. [13]
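For readers unfamiliar with DPO, the preference objective can be sketched for a single pair of responses. This follows the commonly published form of the DPO loss, not the code of the cited researchers. The function name `dpo_loss`, the `beta` default, and the log-probability inputs are assumptions for illustration. In practice the log-probabilities would come from the model being tuned and from a frozen reference copy of it.

```python
import math


def dpo_loss(policy_chosen_logp, policy_rejected_logp,
             ref_chosen_logp, ref_rejected_logp, beta=0.1):
    """DPO loss for one (preferred, rejected) response pair.

    Each argument is the total log-probability a model assigns to a response.
    The loss falls as the tuned model raises the preferred response's
    probability, relative to the reference model, more than the rejected one's.
    """
    chosen_margin = policy_chosen_logp - ref_chosen_logp
    rejected_margin = policy_rejected_logp - ref_rejected_logp
    z = beta * (chosen_margin - rejected_margin)
    # -log(sigmoid(z)) written in a numerically stable form.
    return max(-z, 0.0) + math.log1p(math.exp(-abs(z)))
```

When the tuned model and the reference model agree exactly, the loss equals log 2. Any shift toward the preferred response lowers it.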

Researchers have optimized infrastructure through distributed computing and hybrid algorithms to meet Llama’s computational demands. Certain techniques allow large AI models to run efficiently on devices with limited processing power by carefully reducing model size, memory needs, and computational requirements. For example, quantization compresses the model by using fewer bits per parameter, which saves memory without much loss in performance. The Mixture-of-Experts (MoE) approach, by contrast, activates only select portions of a model for a given input, so only the relevant parts are engaged during processing, which makes computation faster and less resource-intensive (Researchgate, June). [14]
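Both ideas can be shown in a few lines of plain Python. This is a simplified sketch under stated assumptions, not the researchers’ implementation: `quantize_int8` uses basic symmetric 8-bit rounding, and `moe_forward` takes its gate scores as an input, whereas a real MoE layer would compute them with a learned router. All function names and toy values are hypothetical.

```python
import math


def quantize_int8(values):
    """Symmetric 8-bit quantization: map floats to integers in [-127, 127]."""
    max_abs = max(abs(v) for v in values)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(v / scale))) for v in values]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float values from the stored integers."""
    return [q * scale for q in quantized]


def moe_forward(x, experts, gate_scores, top_k=1):
    """Run only the top_k highest-scoring experts and mix their outputs.

    experts is a list of callables; gate_scores holds one score per expert
    (assumed to be supplied by a router that is not modelled here).
    """
    ranked = sorted(range(len(experts)),
                    key=lambda i: gate_scores[i], reverse=True)[:top_k]
    # Softmax over the selected experts only.
    weights = [math.exp(gate_scores[i] - gate_scores[ranked[0]]) for i in ranked]
    total = sum(weights)
    output = 0.0
    for w, i in zip(weights, ranked):
        output += (w / total) * experts[i](x)
    return output, ranked
```

Each stored weight now occupies one byte plus a shared scale factor, and with `top_k=1` only one of the experts is evaluated per input.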

Some experts have applied techniques like Reinforcement Learning from Human Feedback (RLHF) to fine-tune LLMs to better align with human preferences in simulated environments. Specifically, RLHF helps models tailor their outputs to meet user expectations. In simulated military training exercises, this has optimized an LLM’s ability to perform scenario-specific tasks like decision-making and behavioral responses. This process also ensures the model’s alignment with specific communication and behavioral styles within these simulated contexts, enhancing the model’s realism and relevance for military operations. [15]

Llama-Based Models Deployed for Predictive Policing and Electronic Warfare

Experts from the PRC’s security sector see Llama-based models as having huge potential to enhance smart policing (智慧警务). Specifically, these models could improve situational awareness and decision-making by streamlining administrative tasks and providing predictive insights to prioritize police responses. In theory, by extracting important information from reports, generating incident summaries, and classifying events, Llama can enable officers to focus more on handling emergencies in the field. Implementation of these techniques is currently being studied, for instance in Yueqing, Zhejiang Province. The model’s ability to process large and varied data sources, including social media posts, surveillance transcripts, and crime records, makes it an effective tool for proactive and data-driven law enforcement. [16]

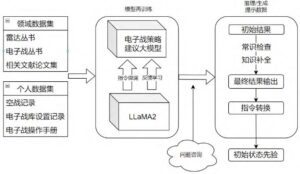

In the military domain, researchers, including experts from the Aviation Industry Corporation of China (AVIC; 中国航空工业集团), one of the PRC’s top defense conglomerates, are studying Llama’s potential to support electronic warfare and self-defense jamming strategies. Integrated with reinforcement learning agents (AI models designed to learn how to make decisions by studying their environment and improving their actions to achieve a specific goal), Llama is used to implement a dual-level approach to maximize efficiency. At the strategic level, Llama determines optimal parameters for disrupting enemy radar systems, while reinforcement learning agents execute real-time tactical adjustments to counter adversary maneuvers. One simulation run by the AVIC researchers using Llama 2 as the base LLM demonstrated that this combined approach improved interference strategy reward scores by approximately 25–31 percent. In other words, the new LLM system led the agent to execute more successful jamming strategies and reduced ineffective actions. [17]

Experts from a science institute under the US-sanctioned China North Industries Group Corporation (NORINCO; 中国兵器工业集团有限公司) have been exploring the integration of LLMs, such as Meta’s Llama 2, into their computer-generated forces (CGF) simulations. From their perspective, these models can enhance autonomous decision-making, behavior simulation, and environmental adaptability. Strategically, LLMs process complex environmental data, enabling agents to make optimized, mission-aligned decisions. Tactically, they provide diverse behavioral scripts, allowing agents to execute coordinated actions and respond to real-time battlefield dynamics, including terrain, adversary movements, and situational threats. This dual-layered integration can create realistic simulation environments where agents autonomously adapt to dynamic conditions, thereby potentially improving PLA training performance and military operations. [18]

ChatBIT: A Llama-Derived Model for Military Intelligence

PLA-affiliated experts have been optimizing Meta’s Llama 13B model, which is based on Llama 2, for military and security purposes. This model, which features 13 billion parameters and a training corpus of one trillion tokens, outperforms GPT-3 on various benchmarks (Huggingface, October 28). The model was introduced by Meta researchers in early 2023 in the paper “Llama: Open and Efficient Foundation Language Models” (Arxiv, February 27, 2023).

Using Llama-13B as a foundation, researchers from AMS have developed “ChatBIT,” a model tailored for open-source intelligence (OSINT) and military dialogue tasks. [19] ChatBIT appears to be a powerful model. It outperforms Vicuna-13B, another Llama-13B-based model, on metrics relevant to military tasks, such as BLEU and ROUGE, which assess translation accuracy and summarization quality (LMSYS Org, March 30, 2023; Elastic, December 1, 2023). Vicuna-13B was built by a team drawn from UC Berkeley, Carnegie Mellon, Stanford, and UC San Diego, whose members reported that it achieved roughly 90 percent of ChatGPT’s quality as judged by GPT-4. According to the AMS researchers’ comparative evaluations, ChatBIT’s results highlight its effectiveness in accurately interpreting nuanced military contexts and producing high-fidelity responses. [20]
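To give a sense of what BLEU- and ROUGE-style metrics measure, the sketch below computes a simplified word-overlap score between a model output and a reference answer. It is an illustration only. Full BLEU combines n-gram precisions up to four words with a brevity penalty, and ROUGE has several variants. The function name `unigram_overlap` and the sample sentences are hypothetical, and this is not the evaluation code used by the AMS researchers.

```python
from collections import Counter


def unigram_overlap(candidate, reference):
    """Return (precision, recall) of word overlap between two texts.

    Precision is the share of candidate words that appear in the reference
    (the idea behind BLEU-1); recall is the share of reference words that
    appear in the candidate (the idea behind ROUGE-1).
    """
    cand_counts = Counter(candidate.lower().split())
    ref_counts = Counter(reference.lower().split())
    # Counter intersection keeps the minimum count of each shared word.
    overlap = sum((cand_counts & ref_counts).values())
    precision = overlap / max(sum(cand_counts.values()), 1)
    recall = overlap / max(sum(ref_counts.values()), 1)
    return precision, recall


precision, recall = unigram_overlap(
    "the lab studies ai",
    "the lab studies ai and drones",
)
```

Here every word of the shorter answer appears in the reference, so precision is 1.0, while recall is 4/6 because two reference words are missing. Scores of this kind reward surface overlap and do not by themselves establish factual accuracy.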

AMS researchers provide examples of ChatBIT outperforming other models on pertinent tasks. When asked about the United States Army Research Laboratory, ChatBIT delivered a comprehensive and accurate response detailing the lab’s focus on AI, cybersecurity, and drone technology. Vicuna-13B, the model it was tested against, provided an incorrect response. [21] Similarly, when tasked with explaining “combat power contribution rate and combat effectiveness correlation” (metrics for evaluating weapons systems), ChatBIT offered an in-depth analysis, whereas Vicuna-13B gave only a superficial explanation. AMS experts argue that ChatBIT’s performance in these tasks demonstrates its readiness for deployment in military Q&A, situational analysis, and operational support tasks.

Conclusion

Meta initially released Llama as an open-source model to support research and non-commercial use; however, the PLA’s adaptation of Llama to build models such as ChatBIT illustrates the problems of a trust-based approach to releasing technology into the global commons. Although Llama comes with license agreements that expressly forbid using the software for military purposes, these have proven ineffective in preventing the PLA and affiliated researchers from doing exactly that (Huggingface, accessed October 28). In practice, these restrictions act as “paper tigers”—stipulations that lack enforceability and fall short of holding entities like the PLA accountable.

The PRC has adapted Meta’s Llama for military and security purposes that are improving the country’s national defense capabilities. Through advanced techniques like LoRA, reinforcement learning, and multimodal integration, PRC researchers have transformed Llama into a tool to meet the intricate demands of military and public security applications. This work underscores the PRC’s commitment to developing AI systems that are integrated, adaptive, and capable of autonomous operation across diverse applications, from predictive policing to electronic warfare.

Open-source LLMs like Llama can accelerate innovation and provide valuable tools across sectors, but they also pose risks if not carefully managed. Without oversight, such technologies can be modified for malicious purposes and used in ways that are contrary to developers’ original intentions. These technologies’ dual potential for both innovation and misuse highlights the importance of balanced oversight to maximize the benefits of open-source AI while mitigating risks associated with strategic misapplication.

Notes

[1] Zhang Long [张龙], Lei Zhen [雷震], Feng Xuanming [冯轩铭], Yan Xiaopei [阎晓培], Chen Renping [陈仁平]. “Military MLLMs (Multimodal Large Language Models): Applied Analysis, Key Technologies, and Evaluation System Framework.” [军事大模型: 应用分析、关键技术和评估体系框架], 2024.

[2] Zhao Qingtian [赵擎天], Li Liwei [李立伟], Chen Xin [陈鑫], Hou Lizhi [侯立志]. “Requirements and Enlightenment about ChatGPT in Military Applications.” [ChatGPT+军事应用需求与启示]

[3] Ji Pengfei [季鹏飞], Hua Songyi [华松逸], Zhang Yuchen [张煜晨], Xiao Mengmeng [肖蒙蒙], Yu Bingchen [余炳晨]. “Current Development Status of Military Large Models and Analysis of Computing Infrastructure Requirements.” [军事大模型发展现状与算力基础设施需求分], Journal of Dual Use Technologies & Products [军民两用技术与产品], June 2024, Issue No. 488.

[4] Cui Xiaolong [崔翛龙], Gao Zhiqiang [高志强], Ji Weitong [姬纬通], Shen Jianan [沈佳楠], Zhang Min [张敏], Qiu Xinyuan [邱鑫源]. “Aiwu Large Model+: Development and Empirical Study of Military Large Model System.” [“艾武大模型+”:一种军事大模型系统的开发与实证], Journal of Data Acquisition and Processing [数据采集与处理], Vol. 39, No. 3, May 2024, pp. 588–597.

[5] Zhang Long [张龙], Lei Zhen [雷震], Feng Xuanming [冯轩铭], Yan Xiaopei [阎晓培], Chen Renping [陈仁平]. “Military MLLMs (Multimodal Large Language Models): Applied Analysis, Key Technologies, and Evaluation System Framework.” [军事大模型: 应用分析、关键技术和评估体系框架], 2024.

[6] Huang Jie [黄洁]. “Insights of Llama 3.1 for the Development of China’s AIGC Industry.” [Llama 3.1对我国AIGC产业发展的启示], China Outsourcing, 2024, Issue 08.

[7] Xu Weijun [徐卫军], Deng Hongfei [邓宏飞], Jia Yaofeng [贾耀锋]. “Exploration and Practice of Large Model Technology in Smart Policing.” [大模型技术在智慧警务的探索与实践], China Security and Protection [中國安防], June 2024.

[8] Ibid.; Zhao Qingtian [赵擎天], Li Liwei [李立伟], Chen Xin [陈鑫], Hou Lizhi [侯立志]. “Requirements and Enlightenment about ChatGPT in Military Applications.” [ChatGPT+军事应用需求与启示]

[9] Cheng Shi [程式], Ye Chunyang [叶春阳]. “Challenges and Reflections on the Application of Artificial Intelligence Technology in the Construction of ‘Smart Public Security’—Based on the Practical Exploration of Yueqing Police Force.” [“ 智慧公安” 建设中人工智能技术应用的挑战与思考———基于乐清公安的实践探索], Journal of Zhejiang Police College [浙江警察学院学报], August 2024, No. 4, Ser. No. 204.

[10] Cui Xiaolong [崔翛龙], Gao Zhiqiang [高志强], Ji Weitong [姬纬通], Shen Jianan [沈佳楠], Zhang Min [张敏], Qiu Xinyuan [邱鑫源]. “Aiwu Large Model+: Development and Empirical Study of Military Large Model System.” [“艾武大模型+”:一种军事大模型系统的开发与实证], Journal of Data Acquisition and Processing [数据采集与处理], Vol. 39, No. 3, May 2024, pp. 588–597.

[11] Xu Weijun [徐卫军], Deng Hongfei [邓宏飞], Jia Yaofeng [贾耀锋]. “Exploration and Practice of Large Model Technology in Smart Policing.” [大模型技术在智慧警务的探索与实践], China Security and Protection [中國安防], June 2024.

[12] Zhang Huaping [张华平], Li Chunjin [李春锦], Wei Shunping [魏顺平], Geng Guotong [耿国桐], Li Weiwei [李伟伟], and Li Yugang [李玉岗], “Large Language Model-Driven Open-Source Intelligence Cognition” [“大语言模型驱动的开源情报认知认领”], National Defense Technology [国防科技], March 2024. 3.

[13] Peng Haojie [彭皓杰], Zhang Wenyu [张文宇], Chen Ruihai [陈锐海], Zhang Qiyue [张启悦]. “Optimization of Air Combat Self-Defense Jamming Strategy Training Driven by Large Language Models.” [“大语言模型驱动的空战自卫干扰策略训练优化”], Journal of Detection & Control [探测与控制学报], October 2024.

[14] Cheng Shi [程式], Ye Chunyang [叶春阳]. “Challenges and Reflections on the Application of Artificial Intelligence Technology in the Construction of ‘Smart Public Security’—Based on the Practical Exploration of Yueqing Police Force.” [“ 智慧公安” 建设中人工智能技术应用的挑战与思考———基于乐清公安的实践探索], Journal of Zhejiang Police College [浙江警察学院学报], August 2024, No. 4, Ser. No. 204.

[15] Li Guangyun [李广运], Chen Delei [陈德雷], Yuan Yafei [袁亚飞]. “Research on the Application of Large Language Model in Computer-Generated Forces.” [大模型在计算机生成兵力中的应用研究], The Sixth Academic Conference on Systems Engineering, Yunnan, China, August 2024; Zhang Long [张龙], Lei Zhen [雷震], Feng Xuanming [冯轩铭], Yan Xiaopei [阎晓培], Chen Renping [陈仁平]. “Military MLLMs (Multimodal Large Language Models): Applied Analysis, Key Technologies, and Evaluation System Framework.” [军事大模型: 应用分析、关键技术和评估体系框架], 2024.

[16] Xu Weijun [徐卫军], Deng Hongfei [邓宏飞], Jia Yaofeng [贾耀锋]. “Exploration and Practice of Large Model Technology in Smart Policing.” [大模型技术在智慧警务的探索与实践], China Security and Protection [中國安防], June 2024; Cheng Shi [程式], Ye Chunyang [叶春阳]. “Challenges and Reflections on the Application of Artificial Intelligence Technology in the Construction of ‘Smart Public Security’—Based on the Practical Exploration of Yueqing Police Force.” [“智慧公安” 建设中人工智能技术应用的挑战与思考———基于乐清公安的实践探索], Journal of Zhejiang Police College [浙江警察学院学报], August 2024, No. 4, Ser. No. 204.

[17] Peng Haojie [彭皓杰], Zhang Wenyu [张文宇], Chen Ruihai [陈锐海], Zhang Qiyue [张启悦]. “Optimization of Air Combat Self-Defense Jamming Strategy Training Driven by Large Language Models.” [“大语言模型驱动的空战自卫干扰策略训练优化”], Journal of Detection & Control [探测与控制学报], October 2024.

[18] Li Guangyun [李广运], Chen Delei [陈德雷], Yuan Yafei [袁亚飞]. “Research on the Application of Large Language Model in Computer-Generated Forces.” [大模型在计算机生成兵力中的应用研究], The Sixth Academic Conference on Systems Engineering, Yunnan, China, August 2024.

[19] Zhang Huaping [张华平], Li Chunjin [李春锦], Wei Shunping [魏顺平], Geng Guotong [耿国桐], Li Weiwei [李伟伟], and Li Yugang [李玉岗], “Large Language Model-Driven Open-Source Intelligence Cognition” [“大语言模型驱动的开源情报认知认领”], National Defense Technology [国防科技], March 2024. 3.

[20] Ibid.

[21] Ibid.